Social Development of Statistical Methodologies

In order to open up the questions that concern us about the use of statistical data to shore up arguments being made by legal equality advocates, it is useful to put our critiques of statistical methodology in the context of the existing critical conversations about how methods develop in and through social and political relations. 1 Here, we briefly review critical analyses of categorization, counting, and statistical methods; we explore some examples of the political expansion of these methods; and we introduce some ways of thinking about statistical methods, and the invention of the norm, specifically, as co-constitutive with processes of political organization.

It is important to understand that the collection and statistical analysis of data do not simply record or observe existing phenomena; rather, analytic methods emerge in the context of social development. 2 Ian Hacking investigates categorization and study of people, saying, “[People] are moving targets because our investigations interact with them, and change them. And since they are changed, they are not quite the same kind of people as before. The target has moved…. Sometimes sciences create kinds of people that in a certain sense did not exist before.” 3 Fundamentally, counting a set of objects drawn from multiple sources or contributed by multiple individuals requires that the objects be grouped into agreed-upon categories, which might be delineated differently depending on the interests of the counter. These categorical constructs, which emerge from, produce, and reproduce social assumptions, are required for a number to carry a meaning shared among people, and they arise alongside other technologies for sorting and managing populations and resources.

In his book Seeing Like a State, James C. Scott describes how the standardized collection of data constitutes and produces governing capacity for states, creating a new kind of “stateness” that characterizes modern nation-states. He traces the evolution of practices in which local methods and measures are replaced by standardized ways of naming people, measuring land and crops, speaking a national language, owning property, and the like. These processes of standardizing a certain way of seeing and inventorying things occur both within colonizing countries, which force national standards on their own people in place of local customs, and in processes of colonization, where ways of organizing life on the colonizer’s terms (whether naming practices, land ownership schemes, family formation norms, racial categories, or gender categories) are forced on colonized peoples. 4

Scott offers the example of the standardization of measurement in France in the context of Napoleonic state-building to illustrate how stateness changes with the administration of standardized norms. 5 During this period, local practices of measurement were replaced by national standards. Prior to standardization, every village had its own pint or bushel size, its own rules about measurement, which were often manipulated by feudal lords to increase their ability to extract from peasants. The move to a standardized system of measurement facilitated trade and the extraction capacities of the central government. It was advocated by members of the Third Estate who were tired of being taxed unfairly by nobles who manipulated measures to extract higher rents. Yet it was also widely resisted by people who were used to their local measures and wanted to retain them. Local ways of measuring often fit local needs better than national measures. The significant tension surrounding the enforcement of national standard measures and the replacement of local practices of measurement often resulted in uneven implementation of national standards and outright resistance.

The idea of “equality” that was emerging during that time, which we see represented in the documents of the French Revolution and the Enlightenment more generally, was centrally about this new uniformity—about an idea of national citizenship that imagined:

a series of centralizing and rationalizing reforms that would transform France into a national community where the same codified laws, measures, customs and beliefs would everywhere prevail…. [It imagined] a national French citizen perambulating the kingdom and encountering exactly the same fair, equal conditions as the rest of his compatriots. … This simplification of measures, however, depended on that other revolutionary political simplification of the modern era: the concept of a uniform, homogeneous citizenship. 6

The fairness and equality called for during this period required new legal and administrative systems that aimed for uniformity and standardization that could produce a new legibility and a new ability to see and manage the country—resources and population—from the position of the central government.

Standardized measurement and counting procedures produce new kinds of governance that emerge with those regimes of knowledges and practices. In addition to illustrating this process of standardization and the production of new forms of stateness through the example of standardization of measures, Scott traces this process in the standardization of land tenure. Looking at a variety of contexts, including post-revolutionary France and Russia in the 1860s, he describes how land ownership changed as common land use was eliminated and new forms of land tenure were implemented. Common land use schemes typically involved villages determining the shared use of common lands between villagers in highly local, complex, and frequently changing arrangements. The elimination of the commons and the enforcement of “free hold estate” regimes where land belonged to a single owner rather than to whole villages made property relationships more legible to government, making it easier to extract revenue. Through the standardization of land tenure, a new relationship between individuals, populations and the government was established, in part because government gained new ways of counting, assessing, and knowing about land and therefore governing its use and users.

Enforcing standardized private-property regimes, methods of identity surveillance, family and gender norms, state-approved language and customs, and the like on Indigenous people is, of course, central to the processes of genocide and settlement that are ongoing in North America, the Pacific, Palestine, Australia, and elsewhere. Colonization shapes the pace and methods by which “stateness” is produced, but Scott suggests that producing “stateness,” whether in a colonized territory or not, requires the forced replacement of local knowledge and practices with state norms. The processes Scott describes, in which local practices are eliminated and replaced by standardized practices and new ways of gathering data, can be understood as ongoing “state-building” practices, even though his historical examples of weights and measures and land ownership point to particular historical developments in this form of governance. Scott’s work helps expose how “the norm” emerges and circulates as both a statistical concept and a social concept and how the two co-constitute one another.

Ian Hacking’s work is useful for examining how the emerging standardized data collection and administration of categorical norms described by Scott developed further to produce the kind of governmentality that Foucault describes as characterized by state racism. Hacking’s work helps expose how math is an active force, not just measuring what exists but organizing social reality. Hacking argues that large-scale counting projects are launched to understand and alter some particular quantity, like maximizing profit or decreasing disease. He describes the years between 1820 and 1840 as the period when we first see what he calls the “avalanche of printed numbers,” which focused on surveying deviance. 7 During this period, the European reading public moved from non-numeracy to numeracy. The cholera epidemic and a purported “crime wave” in Europe were both reported and addressed with policies developed through the lens of statistical data. Reporting on these phenomena familiarized the public with statistics and numerical thinking as a way of conceiving of threats and dangers.

The identification of population-level risks in the form of threats and drains was a new way of understanding and constituting the nation. Statistical methods, essential for the interpretation of these population-based quantitative figures, produced new categories of people and events and new forms of knowledge and governance. Hacking suggests that the new legibility of the population through the advent of popular statistics transformed the social context by inventing and formulating new problems and subpopulations. Hacking writes, “enumeration demands kinds of things or people to count,” 8 asserting that “many of the categories we now use to describe people are byproducts of the needs of enumeration.” Hacking argues that the “human sciences … are driven by several engines of discovery, which are thought of as having to do with finding out the facts, but they are also engines for making up people.” 9

We think of many kinds of people as objects of scientific inquiry. Sometimes to control them, as prostitutes, sometimes to help them, as potential suicides. Sometimes to organise and help, but at the same time keep ourselves safe, as the poor or the homeless. Sometimes to change them for their own good and the good of the public, as the obese. Sometimes just to admire, to understand, to encourage and perhaps even to emulate, as (sometimes) geniuses. We think of these kinds of people as definite classes defined by definite properties. As we get to know more about these properties, we will be able to control, help, change, or emulate them better.

The development of methods for gathering standardized data and the rise of certain counting methods enabled taxation, military conscription, and police work on a national scale and as a centralized practice. These methods produced new governing capacities for states; they created the forms of governance we now identify as states. With the invention of the norm, counting gave way to calculation: seeking averages, assessing risks at the population level, and imagining the population from a racialized perspective that seeks to identify and eliminate perceived threats and drains. Foucault, of course, describes the operation of biopolitical power and the role of state racism: the cultivation of the life of the population requires the continual identification of populations marked as threats or drains, so that the national population can be made to live while perceived internal enemies are left to die or are killed in any number of ways. The assessment of risk at the population level is characteristic of biopolitics. Categories of deviancy and illness are co-constitutive with the development of statistical methods to describe a population, and the move toward population management and control is what characterizes nation-state governing capacities.

Statistics and Eugenics

The process of producing categories of people for scientific examination, evaluation, and sorting that Hacking describes can be seen in the history of the creation of statistical methods. An examination of the key figures who developed statistical methods sheds light on the connection between population management, racialization, data collection, and statistical analysis. Galton, Pearson, Fisher, and other developers of statistics were leaders of European eugenics movements, and they built on foundations laid by Quetelet. 10 As discussed below, early eugenic movements varied widely in their specific goals and the means employed to achieve them, but a unifying aim of eugenics was to promote life and reproduction for some defined, desired populations and/or to suppress reproduction of undesired populations. The development of statistics was essential to defining desired and undesired populations and to carrying out eugenic projects.

The Gaussian Distribution

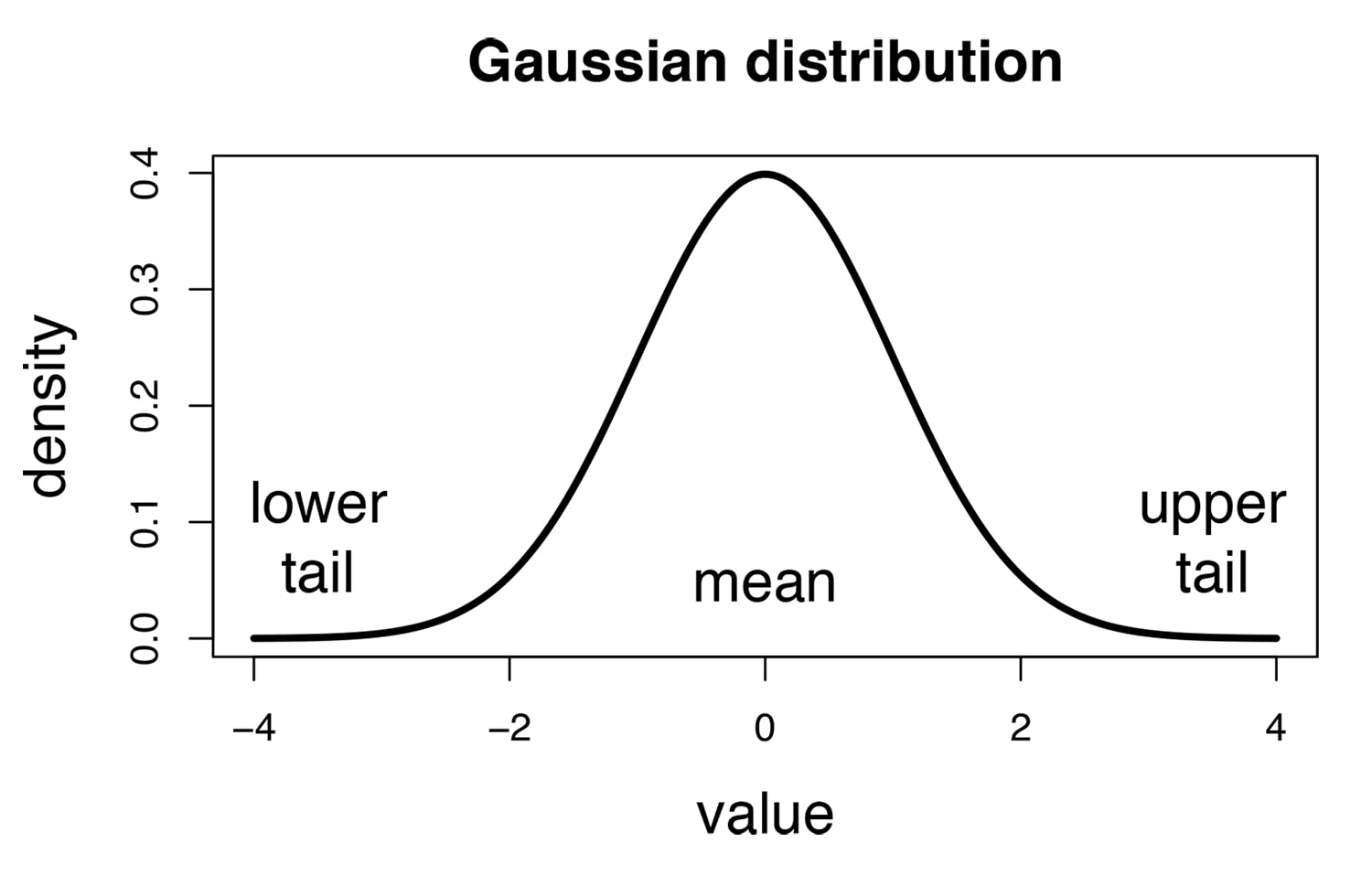

During the same period that the metric system was beginning to be enforced in France, Carl Friedrich Gauss, sometimes called “the Prince of Mathematicians,” invented a method of describing the behavior of a random variable whose values tend to fall around the average value. This is now known as the Gaussian distribution, the normal distribution, or the bell curve, and is represented in Figure 1.

A standard Gaussian distribution is shown. The height of the curve at any value gives the probability density there: values under taller parts of the curve are more likely, and the probability that the random variable falls within a range of values equals the area under the curve over that range. The mean (most probable value) and upper and lower tails (less probable values) are labeled.
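To make the distinction between curve height and probability concrete, here is a minimal Python sketch using only the standard library. The function names `normal_pdf`, `normal_cdf`, and `prob_between` are illustrative choices of our own, not drawn from any source discussed here; the density formula and the use of the error function (`math.erf`) for cumulative probability are the standard textbook definitions.

```python
import math


def normal_pdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Height of the Gaussian curve (the probability density) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def normal_cdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Area under the curve to the left of x, i.e. P(X <= x)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def prob_between(a: float, b: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Probability that X falls in [a, b]: the area under the curve between a and b."""
    return normal_cdf(b, mu, sigma) - normal_cdf(a, mu, sigma)


if __name__ == "__main__":
    # The curve is tallest at the mean and falls off symmetrically toward both tails.
    for x in (-3, -2, -1, 0, 1, 2, 3):
        print(f"x = {x:+d}  density = {normal_pdf(x):.4f}")
    # Probabilities are areas, not heights.
    print(f"P(-1 <= X <= 1) = {prob_between(-1, 1):.4f}")  # 0.6827
    print(f"P(X > 2)        = {1 - normal_cdf(2):.4f}")    # 0.0228
```

Roughly two-thirds of the probability lies within one standard deviation of the mean, while only about 2 percent lies in the upper tail beyond two standard deviations.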

In 1835, Adolphe Quetelet applied the idea of the Gaussian distribution to describe groups of people, calling these new descriptions of society “social physics.” Quetelet proposed that a Gaussian distribution could be used to analyze the distribution of people in a group and that the mean of this distribution represented the group “norm.” Quetelet was a teacher, mathematician, and astronomer, and as an astronomer he worked with the Gaussian distribution to estimate errors in astronomical measurements. 11 He also took an interest in the quantitative study of human populations, particularly in rates of birth, death, and marriage, body shape and size, and “moral” behavior (such as rates of marriage, suicide, and crime). 12 Drawing on his familiarity with astronomical measurement error, Quetelet worked to promote standardization of measurement and categorization both in astronomy (e.g., time, distance, star type) and in “social physics” (e.g., height, weight, married, single, suicide, natural death). He strove to “perfect” the census and enable the study of “social physics.” 13 In one of the first efforts to quantitatively analyze human populations, Quetelet applied the Gaussian distribution to social observations, which controversially challenged the idea of free will and suggested that the behavior and physical attributes of human populations could be predicted. 14 Through his work, particularly his invention of the body mass index (BMI), which was motivated by the observation by actuaries that more death claims were reported for so-called obese policy holders, Quetelet gave the concept of the norm the status of a eugenic ideal that should be promoted by the state over other ways of being. 15 In his interpretation, deviation from the mean is treated as error, so social deviance is devalued and discouraged. 16 For example, Quetelet observed that “regarding the height of men of one nation, the individual values group themselves symmetrically around the mean,” 17 following a Gaussian distribution. 18 He interpreted the mean of individual heights as defining the ideal height for a group, so that unusually tall or short individuals (individuals in the distribution tails) 19 were deemed too tall or too short. 20 Quetelet went on to argue that differences in mean height between groups serve as evidence that race is rooted in fundamental differences between “peoples.” 21 Quetelet’s preference for rankable categories and his aversion to the unusual were extended to the interpretation of a probability distribution. Through Quetelet’s intervention, we can see that with the development of the norm “comes the concept of deviations or extremes.” The idea of the statistical norm “divides the total population into standard and nonstandard subpopulations.” 22
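The following minimal Python sketch illustrates two mechanisms described above, under simplifying assumptions. The first is Quetelet’s index, which today goes by BMI and is computed as weight in kilograms divided by the square of height in metres. The second is the mean-as-norm reading, in which each individual is scored by distance from the group mean and those beyond a cutoff are labeled “deviant.” The heights are invented for illustration, and the two-standard-deviation cutoff (`k=2.0`) is our assumption, not a threshold Quetelet himself proposed; the function names are likewise hypothetical.

```python
import statistics


def quetelet_index(weight_kg: float, height_m: float) -> float:
    """Quetelet's index (today's BMI): weight in kg divided by height in metres squared."""
    return weight_kg / height_m ** 2


def label_by_norm(values: list[float], k: float = 2.0) -> tuple[float, float, list[str]]:
    """Label each value 'norm' or 'deviant' by its distance from the group mean.

    A value is 'deviant' if it lies more than k population standard deviations
    from the mean, i.e. in either tail. The cutoff k is an arbitrary choice.
    """
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    labels = []
    for v in values:
        z = (v - mu) / sigma  # deviation from the mean in standard-deviation units
        labels.append("deviant" if abs(z) > k else "norm")
    return mu, sigma, labels


if __name__ == "__main__":
    print(f"{quetelet_index(70, 1.75):.1f}")  # 22.9
    heights_cm = [160, 165, 170, 170, 170, 175, 180, 200]  # hypothetical heights
    mu, sigma, labels = label_by_norm(heights_cm)
    print(f"mean = {mu}, sd = {sigma:.2f}")
    for h, label in zip(heights_cm, labels):
        print(h, label)  # only 200 falls outside the band of two standard deviations
```

Nothing in the arithmetic says that the band around the mean is better than the tails; that judgment enters only through the labels and the chosen cutoff.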

In the late 1800s, prominent eugenicist and statistician Sir Francis Galton invoked a Gaussian distribution to model social classes defined by earned income and to argue that these classes could be attributed to differences in “genetic worth.” 23 Galton imposed his ideas of social hierarchy onto distributions of measurable characteristics in a different way than Quetelet did: he favored one “high” extreme over the rest of the distribution. 24 Galton aimed to alter the genetic make-up of the population to increase “intelligence,” height, physical capacity, whiteness, and the like, thus “improving” the population. Galton coined the word “eugenics” to describe this strategy. He advocated eugenic public policies to encourage some people (high-earning, white, intelligent) to reproduce, such as state-funded incentives for marriage between individuals from high-earning families, and to prevent others (poor, disabled, criminalized) from reproducing. Through this work, Galton became widely regarded as the founder of the British eugenics movement. 25 Eugenic policies were promoted in the name of public health and welfare: the life of the population deemed to be the national population would be cultivated, and other kinds of life deemed threatening to the national population would be extinguished or encouraged to die off.

To support his eugenic agenda, Galton used a statistical approach to categorize and quantify human characteristics. For instance, Galton assumed that intelligence could be quantified and that quantified intelligence follows a Gaussian distribution. 26 In that system, Galton valued individuals with unusually high intelligence over individuals with average or unusually low intelligence. In Figure 1, this can be interpreted as valuing individuals whose quantified intelligence lies in the upper tail of the distribution and devaluing those whose quantified intelligence lies near the mean or in the lower tail. The dominant social interpretation of the Gaussian distribution shifted, as signaled by its renaming to the now commonly used “normal distribution.” 27 Rather than depicting an ideal mean with undesirable deviance around it, as Quetelet had suggested, the distribution came to be read with the mean as mediocre and undesirable, one tail as wholly unacceptable, and the other tail as the preferred state. Galton’s aspirational approach to the normal distribution, his desire to use statistical measurement to intervene in human characteristics and change the population “for the better,” is clear in his literal reference to a distribution mean as the “mediocre point.”
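The shift from Quetelet’s reading to Galton’s can be sketched numerically. Applied to the same standard normal curve, the two hypothetical functions below (`quetelet_reading` and `galton_reading`, names of our own) draw a line at an arbitrary two standard deviations and report what share of the population each reading marks as valued or devalued. This is an illustration under stated assumptions, not a reconstruction of either man’s own procedure.

```python
import math


def upper_tail(k: float) -> float:
    """Area under the standard Gaussian curve to the right of k standard deviations."""
    return 0.5 * math.erfc(k / math.sqrt(2.0))


def quetelet_reading(k: float = 2.0) -> dict[str, float]:
    """Two-tailed reading: the band around the mean is the norm, both tails deviate."""
    tail = upper_tail(k)  # by symmetry the lower tail has the same area
    return {"deviant (lower tail)": tail, "norm": 1.0 - 2.0 * tail, "deviant (upper tail)": tail}


def galton_reading(k: float = 2.0) -> dict[str, float]:
    """One-tailed reading: only the upper tail is valued; the mean is 'mediocre'."""
    top = upper_tail(k)
    return {"valued (upper tail)": top, "devalued (everything else)": 1.0 - top}


if __name__ == "__main__":
    for name, reading in (("Quetelet", quetelet_reading()), ("Galton", galton_reading())):
        print(name)
        for label, share in reading.items():
            print(f"  {label:<28} {share:.2%}")
```

The areas are computed identically in both functions; only the labels attached to regions of the curve differ, so that the same 2 percent or so of the population is “deviant” under one reading and the only “valued” group under the other.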

This paradigm shift in the interpretation of a probability distribution, from valuing the mean and devaluing the tails, to valuing one tail and devaluing the other tail and common values, illustrates the key role played by political and social interests in interpretations of statistical data. Despite the divergent values they placed on different portions of the normal curve, both Quetelet and Galton used the same fundamental strategy of promoting racist agendas in their interpretations of a probability distribution. 28

Regression Towards the Mean

The social reinterpretation of the Gaussian distribution was just one part of the new quantitative technologies and perspectives emerging with the increasing momentum of the eugenics movement in Europe. Galton and other eugenicists proposed to improve the citizenry of a country, defining improvement as increased intelligence, height, heterosexuality, wealth, whiteness, physical capability, and the like in the population, to be achieved by altering the genetics of the population as a whole. 29 The eugenic movement called for selective breeding programs in which individuals deemed less desirable (people with physical impairments, people of color, people with “deviant” sexuality or gender, poor people, people deemed insane) would be sterilized and individuals deemed more desirable (white people, rich people, people deemed intelligent, people perceived to be able-bodied, heterosexual people) would be encouraged to reproduce and supported by the state. 30 However, eugenic strategies were not limited to selective breeding and sterilization programs; they also included anti-union organizing (since unions advocated equal pay for workers deemed eugenically unequal), immigration restrictions, and the erosion of welfare. 31 All of this, too, was justified in the name of public health and wellbeing: the life of the population deemed to be the national population would be cultivated, and other kinds of life deemed threatening to it would be extinguished.

To support eugenic projects, Galton used statistics to explain the heritability of variable traits through genetically related families. 32 Of particular note, Galton’s eugenics agenda inspired the work on trait inheritance in which he first described the statistical concept of “regression towards the mean.” 33 Galton found that the seeds of pea plants whose parents had particularly large seeds are often smaller than the seeds of their parents. Galton then considered a similar phenomenon in humans, finding that the children of unusually tall individuals are typically shorter than their parents and, conversely, that the children of unusually short individuals are typically taller than their parents. 34 He plotted average parent height against average child height, found a line to best fit this data, and described the best-fit line mathematically in an effort to explain and predict height. 35 For these analyses he is credited with inventing regression, a fundamental technique used widely in statistics today to help understand relationships and correlations. 36
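A minimal Python sketch of the phenomenon Galton described, under a simplified hypothetical model: each height is a heritable component shared by parent and child plus an independent random component. The means, standard deviations, and seed are invented, a single parent stands in for Galton’s averaged parental height, and the line is fitted by ordinary least squares, the modern standard method, which is not necessarily the procedure Galton himself used. Because part of a tall parent’s height is chance that the child does not inherit, the fitted slope comes out below 1.

```python
import random
import statistics


def simulate_families(n: int = 10_000, mean: float = 170.0, genetic_sd: float = 6.0,
                      noise_sd: float = 4.0, seed: int = 1) -> tuple[list[float], list[float]]:
    """Hypothetical model: height = shared heritable component + independent random component."""
    rng = random.Random(seed)
    parents, children = [], []
    for _ in range(n):
        g = rng.gauss(mean, genetic_sd)                # heritable part, shared by parent and child
        parents.append(g + rng.gauss(0.0, noise_sd))   # parent's own chance component
        children.append(g + rng.gauss(0.0, noise_sd))  # child's independent chance component
    return parents, children


def least_squares(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = intercept + slope * x."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx


if __name__ == "__main__":
    parents, children = simulate_families()
    slope, intercept = least_squares(parents, children)
    print(f"child = {intercept:.1f} + {slope:.2f} * parent")
    # Families whose parent is unusually tall (above 180 cm in this toy model).
    tall = [(p, c) for p, c in zip(parents, children) if p > 180.0]
    print(f"tall parents average {statistics.fmean(p for p, _ in tall):.1f} cm, "
          f"their children {statistics.fmean(c for _, c in tall):.1f} cm")
```

With these parameters the slope should land near 36 / (36 + 16) ≈ 0.69, the share of height variance that is heritable in the model, and the children of the tallest parents average noticeably shorter than those parents even though nothing about the population changes from one generation to the next.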

Based on the example in the text, the parent’s non-heritable random height component is unusually large (shown by the vertical line labeled “parent”). Assuming that the parent and child share the same genetic background, the probability that the child is taller than the parent by chance is the area under the curve to the right of the parent’s line, and the probability that the child is shorter by chance is the area under the curve to the left of it.
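The reasoning in this caption reduces to a single area under a Gaussian curve. Assuming, as the caption does, that parent and child share the same heritable component, and assuming further that the child’s random component is an independent draw from a zero-mean Gaussian, the chance that the child is taller is the probability that the child’s random component exceeds the parent’s. The function name and the example values below are hypothetical.

```python
import math


def prob_child_taller_by_chance(parent_noise: float, noise_sd: float) -> float:
    """Probability that the child's random height component exceeds the parent's.

    Assumes parent and child share the same heritable component and that the
    child's random component is an independent draw from a zero-mean Gaussian
    with standard deviation noise_sd. The result is the area under that curve
    to the right of the parent's value.
    """
    z = parent_noise / noise_sd
    return 0.5 * math.erfc(z / math.sqrt(2.0))


if __name__ == "__main__":
    # Hypothetical values: the parent's chance component is +8 cm, twice the spread of 4 cm.
    taller = prob_child_taller_by_chance(8.0, 4.0)
    print(f"P(child taller by chance)  = {taller:.4f}")      # 0.0228
    print(f"P(child shorter by chance) = {1 - taller:.4f}")  # 0.9772
```

The more unusual the parent’s chance component, the smaller the area to its right, which is why children of unusually tall parents are usually shorter than those parents.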

Galton specifically used the term “regression towards the mean,” like his description of the mean as the “mediocre point,” because he believed that the upper tail of a Gaussian distribution (measuring, for example, seed size, height, intelligence, or physical capacity) is the point of aspiration. His observation that unusually tall individuals are likely to have children shorter than themselves represented a literal “regression towards the mean.” This language has persisted in statistics today in “regression analysis,” a linguistic marker of the eugenic origin of this class of statistical methods. Galton’s theory of genetic regression towards the mean over generations turned out to be flawed. His work was nonetheless successful in promoting eugenics, even though it was later shown to be inaccurate. 37 The deployment of purportedly scientific or data-based arguments was effective regardless of whether those arguments were internally consistent. 38

To support and facilitate the eugenics movement, new statistical techniques were developed by Galton, Karl Pearson, Ronald Fisher, and others both to analyze complex new genetic data and to predict the parameters of a eugenic program needed to obtain the desired results. 39 The eugenics movement in the United States advocated social reforms that many would today describe as explicitly racist, xenophobic, and anti-poor. 40 For example, the movement promoted legislation limiting the immigration of people from supposedly genetically inferior groups 41 and legislation calling for the sterilization of supposedly genetically inferior individuals. 42 Beyond the movement itself, social reformers of many kinds mobilized ideas popularized by eugenics-focused scientists to argue that the changes they sought would improve the population and promote the good life. For example, statistically supported eugenic arguments were famously embraced by white feminists such as Margaret Sanger, who worked to improve access to contraceptives. 43

Shifting Eugenic Interventions

Historians of statistics differ in their views about the relationship between eugenics and statistics. Some argue that it is essential to understand that the methods were shaped by their eugenic aims. According to Donald Mackenzie, “eugenics did not merely motivate [the] statistical work [of Galton, Pearson, and Fisher] but affected its content. The shape of the science they developed was partially determined by eugenic objectives. … [E]ugenics was the dog that wagged the tail of population genetics and evolutionary theory, not the other way round.” 44 Francisco Louçã, however, departs from this view, arguing that statistics is the “emancipated heir” of eugenics—that their paths “converged and diverged,” suggesting that statistical methods need not be approached with caution or suspicion related to their eugenic origins. 45 Tukufu Zuberi agrees with Louçã that eugenics and statistics have diverged, but Zuberi asserts that statistical genetics still “supports the use of race as a biological indicator of social difference.” 46

The relationship between statistical methods and the public assertion of a eugenics agenda has shifted significantly since the early development of statistical methods by eugenics leaders. As statistical reasoning and methodology were further developed, it was found that in many cases the eugenic programs promoted by early developers could not achieve their stated goals of altering the characteristics of a population. 47 Over time, especially after World War II, when eugenics became associated with Nazism and genocide, the term “eugenics” and the idea of controlling the genetics of a population using mass sterilization and controlled breeding programs lost popularity. 48 However, reform programs that aim to cultivate national populations according to racial, economic, and sexual norms remain central to contemporary governance and continue to draw on fields of study that are either functionally eugenicist or have been influenced by eugenics. 49 In the 1950s in the United States, eugenics scholars 50 increasingly incorporated ideas about environmental influences, particularly childhood experiences, into their understanding of how to shape the population to meet norms. Rather than promoting the use of state-enforced breeding programs, these scholars produced studies that encouraged individuals to choose their co-parent based on their genetic potential and to provide the “correct” home environment for children, with the goal being to produce normative children. 51 During this same time period, the moral model of disability was replaced by the geneticized medical model and race became increasingly geneticized. 52 These shifts supported the idea that individual decisions regarding family formation were essential to producing genetically superior, i.e., norm-abiding children. 53

Scholars in many fields have critically examined how programs central to the US administrative state operate to establish and enforce racialized gender norms and to forward projects of colonization and population control. They have pointed to many programs and legal and administrative schemes, including Indian boarding schools, welfare family caps, marriage promotion programs, curricula in public education, and immigration regulations that tie immigration status to family, health status, criminal history, and class. 54 Through programs that enforce norms by modifying behavior, as well as programs that aim more explicitly to change the biological make-up of the population, state administrative capacities that rely on collecting statistical data through racialized–gendered classification systems are mobilized to produce and/or protect a desired national population. Membership in the national population is distributed, and capacities to reproduce are provided or terminated, in ways that are significantly racialized and gendered. 55 After World War II, advocates of these kinds of programs described them as eugenics less and less often, but it would be naïve to assume that a change of terms and certain developments in methodology establish a clean break from the intertwining of eugenics with statistical methods and the racialized–gendered national population control projects they serve. Because the relationship between eugenics and statistics was obscured and buried, many of the statistical techniques developed for eugenics continue to be used without re-examination of the assumptions that undergird them. We are particularly interested in how methods originally designed for population management and normalization are still often used for those same purposes in new contexts.

Relying on the analytical frameworks developed by Ian Hacking, Mitchell Dean, and Michel Foucault, and more broadly in disability studies and women of color feminism, we can see how the intertwining of eugenics and the initial development of statistical methods continues to have effects today. Statistics connect intimate life behaviors and capacities, like those involved in reproduction, to the imperatives of “populations.” Processes that identify and promote the life of a national population by collecting standardized data that constructs who is inside that population and who constitutes a threat to that population are still central to the maintenance and growth of governing capacities, and still operate through the deployment of statistical data. Even if contemporary statistics has moved away from its eugenic origins, the demands of biopolitics continue to influence the scholarly field and the uses of statistics in political and legal discourses.

LGBT rights advocacy demonstrates the connections between demands for formal legal equality, the production of statistical data, and the promotion of narratives of deservingness and undeservingness rooted in racial nationalism. However, the concerns we raise about the mobilization of these investments are not limited to that particular strain of advocacy, but rather haunt many reform agendas. The interwoven strategies of legal equality reforms and the mobilization of statistical data to portray a rights-deserving population are ubiquitous in US social reform today. Social reform advocacy takes “the nation” as its target, and uses these methods to construct that target.

- See Miranda Joseph, Debt to Society: Accounting for Life Under Capitalism (Minneapolis: University of Minnesota Press, 2014): “I take accounting to be, like law, a set of rules and procedures that can and should be subject to critique not only as an instrument of established dominant powers but also as a performative force, a socially formative force” (30).[↑]

- See also Alain Desrosières, The Politics of Large Numbers: A History of Statistical Reasoning, trans. Camille Naish (Cambridge: Harvard University Press, 1998); Mary Poovey, The History of the Modern Fact: Problems of Knowledge in the Sciences of Wealth and Society (Chicago: University of Chicago Press, 1998) (“[I]n most texts that purport to describe the material world, even the numbers are interpretive, for they embody theoretical assumptions about what should be counted, how one should understand material reality, and how quantification contributes to systematic knowledge about the world…. [Yet] numbers have come to seem preinterpretive or somehow noninterpretive…” [xii]); and Kim TallBear, Native American DNA: Tribal Belonging and the False Promise of Genetic Science (Minneapolis: University of Minnesota Press, 2013) (“Rather than being discrete categories where one determines the other in a linear model of cause and effect, ‘science’ and ‘society’ are mutually constitutive—meaning one loops back in to reinforce, shape, or disrupt the actions of the other, although it should be understood that, because power is held unevenly, such multidirectional influences do not happen evenly” [11]).[↑]

- Ian Hacking, “Making up People,” London Review of Books 28.16–17 (2006): 23–26. See also Catherine Bliss, Race Decoded: The Genomic Fight for Social Justice (Stanford, CA: Stanford University Press, 2012) (examining how contemporary genomic science constructs new racial meanings and “new avenues for identity formation” [19]); and Britt Rusert and Charmaine D.M. Royal, “Grassroots Marketing in a Global Era: More Lessons from BiDil,” Journal of Law, Medicine and Ethics 39 (2011): 79–90 (examining the deployment of racial categories in pharmacogenomics and the marketing of pharmaceuticals).[↑]

- Andrea Smith, Conquest: Sexual Violence and American Indian Genocide (Massachusetts: South End Press, 2005); Scott Lauria Morgensen, “Homonationalism: Theorizing Settler Colonialism within Queer Modernities,” GLQ: A Journal of Lesbian and Gay Studies 16 (2010): 105–131, 116; Rifkin, When Did Indians Become Straight; J. Kēhaulani Kauanui, Hawaiian Blood: Colonialism and the Politics of Sovereignty and Indigeneity (Durham, NC: Duke University Press, 2008).[↑]

- See also Desrosières, The Politics of Large Numbers: “Creating a political space involves and makes possible the creation of a space of common measurement, within which things may be compared, because the categories and encoding procedures are identical” (9).[↑]

- James C. Scott, Seeing Like a State: How Certain Schemes to Improve the Human Condition Have Failed (New Haven: Yale University Press, 1998), 32.[↑]

- Hacking, “Biopower and the Avalanche of Printed Numbers,” Humanities in Society 5 (1982), 289.[↑]

- Ibid., 280.[↑]

- In the article “Making up People,” Hacking identifies several engines of discovery that, he says, are also engines for making up people. The engines he lists are: 1. Count, 2. Quantify, 3. Create norms, 4. Correlate, 5. Medicalise, 6. Biologise, 7. Geneticise, 8. Normalise, 9. Bureaucratise, 10. Reclaim our identity.[↑]

- Lennard J. Davis, “Constructing Normalcy: The Bell Curve, the Novel and the Invention of the Disabled Body in the Nineteenth Century,” in The Disability Studies Reader, ed. Lennard J. Davis (New York: Routledge, 1997), 9–28; Garabed Eknoyan, “Adolphe Quetelet (1796–1874)—The Average Man and Indices of Obesity,” Nephrology Dialysis Transplantation 23 (2008): 47–51.[↑]

- Frank H. Hankins, Adolphe Quetelet as Statistician, diss., Columbia University, 1908.[↑]

- Ibid.; Eknoyan, “Adolphe Quetelet.”[↑]

- Hankins, Adolphe Quetelet.[↑]

- Hacking, The Taming of Chance (Cambridge: Cambridge University Press, 1990).[↑]

- Eknoyan, “Adolphe Quetelet.”[↑]

- Francisco Louçã, “Emancipation through Interaction—How Eugenics and Statistics Converged and Diverged,” Journal of the History of Biology 42 (2009): 649–684; Hacking, “Making up People”; Hankins, Adolphe Quetelet.[↑]

- Quetelet, Lettres à S.A.R. le duc régnant de Saxe-Cobourg et Gotha, sur la théorie des probabilités, appliquée aux sciences morales et politiques (1846), viii, cited in “Adolphe Quetelet,” International Encyclopedia of the Social Sciences (1968), Encyclopedia.com.[↑]

- Louçã, “Emancipation through Interaction.”[↑]

- See Figure 1.[↑]

- Davis, “Constructing Normalcy.”[↑]

- Quetelet wrote, “Every people presents its mean and the different variations from this mean in numbers which may be calculated a priori. This mean varies among different peoples and sometimes within the limits of the same country, where two peoples of different origins may be mixed together.” Qtd. in Hankins, Adolphe Quetelet.[↑]

- Davis, “Constructing Normalcy.”[↑]

- Donald A. MacKenzie, Statistics in Britain, 1865–1930: The Social Construction of Scientific Knowledge (Edinburgh: Edinburgh University Press, 1981), 15–21.[↑]

- “Galton cast his attention on the differences between individuals, on the variability of their attributes, and on what he would later define as natural aptitudes, whereas Quetelet was interested in the average man and not in the relative distribution of nonaverage men” (Desrosières, The Politics of Large Numbers, 113).[↑]

- MacKenzie, Statistics in Britain, 51–72. Interestingly, along with his work in statistics, Galton also invented the practice of police fingerprinting, exposing the perpetual link between the project of identifying and promoting notions of normality and the production of categories of racialized criminality. Davis, “Constructing Normalcy.”[↑]

- Davis, “Constructing Normalcy.”[↑]

- Ibid.[↑]

- “For Galton, it was not Quetelet’s ‘homme moyen’ but the outliers that should concern science: furthermore, for Quetelet, deviations from the norm were pathological, whereas for Galton they were the necessary condition for excellence.” Hacking, The Taming of Chance, cited in Louçã, “Emancipation through Interaction,” 658.[↑]

- Louçã, “Emancipation through Interaction.”[↑]

- Ibid.[↑]

- Celeste M. Condit, The Meanings of the Gene: Public Debates about Heredity (Madison: University of Wisconsin Press, 1999).[↑]

- Louçã, “Emancipation through Interaction.”[↑]

- Francis Galton, “Regression towards Mediocrity in Hereditary Stature,” The Journal of the Anthropological Institute of Great Britain and Ireland 15 (1886): 246–263.[↑]

- Galton, “Regression towards Mediocrity.”[↑]

- Ibid.[↑]

- MacKenzie, Statistics in Britain, 51–72.[↑]

- Interestingly, Galton mistakenly attributed his observations to a remote ancestral influence. That is, Galton theorized that children of tall parents are sometimes shorter because they inherit shortness from their grandparents, great-grandparents, and so on. Today statisticians explain observed regression towards the mean as a statistical consequence of random variation, commonly modeled with a Gaussian distribution. In the case of height inheritance, consider a model where height is explained partly by inherited genetics and partly by Gaussian-distributed random variation (Fig. 2). Individuals who are unusually tall are likely to have both a genetic background and a random component that push their height upward. Their children share their genetic background, so the genetic contribution gives them a tendency to be tall as well. The children’s random components, however, are drawn afresh and are unlikely to be as large as their parents’: the parents’ random components were unusually large to begin with, and drawing an even more extreme value is, by definition, unlikely. As an illustration, suppose one Gaussian-distributed random number happens to fall in the upper tail of the distribution (Fig. 2). The chance that the next independent draw will be greater than the first is equal to the area under the Gaussian curve to the right of the first number, which is small. Notably, although Galton explained regression towards the mean inaccurately, his ancestral-input theory did support his eugenic agenda (the idea that desired characteristics could be determined precisely through generations of selective breeding), unlike the more scientifically rigorous explanation of random chance (desired characteristics are subject to non-heritable variation and so cannot be totally determined through selective breeding).[↑]
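
The height model in the preceding note can be made concrete with a minimal Python sketch. It is an illustration under stated assumptions, not Galton’s analysis and not the authors’ Figure 2: the function names `upper_tail_probability` and `simulate_regression_to_mean` are hypothetical, heights are expressed as deviations from the population mean in arbitrary units, and each child is assumed to inherit its parent’s genetic component unchanged while drawing its own independent Gaussian noise (a deliberate simplification of real inheritance). The first function computes the area under a standard normal curve to the right of a value using the complementary error function; the second picks out the tallest 5% of simulated parents and compares their average deviation with that of their children.

```python
import math
import random


def upper_tail_probability(z: float) -> float:
    """Area under the standard normal curve to the right of z, i.e. P(Z > z)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def simulate_regression_to_mean(
    n: int = 100_000,
    genetic_sd: float = 1.0,
    noise_sd: float = 1.0,
    top_fraction: float = 0.05,
    seed: int = 0,
) -> tuple[float, float]:
    """Hypothetical model: height deviation = genetic component + Gaussian noise.

    Assumption: each child inherits its parent's genetic component unchanged
    but draws its own independent noise term. Returns the mean deviation of
    the tallest `top_fraction` of parents and the mean deviation of their children.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        genetic = rng.gauss(0.0, genetic_sd)
        parent = genetic + rng.gauss(0.0, noise_sd)  # parent's own random component
        child = genetic + rng.gauss(0.0, noise_sd)   # child's fresh random component
        pairs.append((parent, child))

    # Keep only the unusually tall parents (the upper tail of the distribution).
    pairs.sort(key=lambda pair: pair[0], reverse=True)
    tallest = pairs[: max(1, int(n * top_fraction))]

    mean_parent = sum(parent for parent, _ in tallest) / len(tallest)
    mean_child = sum(child for _, child in tallest) / len(tallest)
    return mean_parent, mean_child


if __name__ == "__main__":
    print(f"P(next draw > 2.0) = {upper_tail_probability(2.0):.4f}")
    parent_mean, child_mean = simulate_regression_to_mean()
    print(f"tallest parents: {parent_mean:.2f}, their children: {child_mean:.2f}")
```

Under these assumptions, `upper_tail_probability(2.0)` is roughly 0.023, so a draw two standard deviations above the mean is exceeded by the next draw only about 2.3% of the time. The children of the tallest parents come out taller than average but, on average, only about half as far from the mean as their parents: with equal genetic and noise variances, only the genetic half of a parent’s deviation is expected to carry over, and no appeal to remote ancestors is needed.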

- Condit, Meanings of the Gene, 46–62; MacKenzie, Statistics in Britain, 51–72.[↑]

- Louçã, “Emancipation through Interaction.”[↑]

- The American Eugenics Society defined a partial list of “eugenic problems” which specifically included feeblemindedness, criminality, epilepsy, prostitution, rebelliousness, manic depression, nomadism, ethnicity, inferior races, birth defects, moral perversion, schizophrenia, racial hygiene, homosexuality, immigration, poverty, and feminism. Stephen Jones, “Zoology 61: Teaching Eugenics at WSU,” Washington State Magazine (2007).[↑]

- Eugenicists were key proponents of the Immigration Act of 1924, which severely restricted immigration, particularly of Jews and Southern and Eastern Europeans. Condit, Meanings of the Gene, 3–24.[↑]

- Between 1907 and 1931, 30 states passed laws calling for sterilization of disabled people, resulting in the sterilization of at least 30,000 individuals. Tukufu Zuberi, Thicker than Blood: How Racial Statistics Lie (Minneapolis: University of Minnesota Press, 2003), 58–79; Condit, Meanings of the Gene, 3–24.[↑]

- Condit, Meanings of the Gene, 27–45.[↑]

- MacKenzie, Statistics in Britain, 12, cited in Louçã, “Emancipation through Interaction,” 650.[↑]

- Louçã, “Emancipation through Interaction,” 682.[↑]

- Zuberi, Thicker than Blood.[↑]

- Louçã, “Emancipation through Interaction.”[↑]

- Condit, Meanings of the Gene, 65–81.[↑]

- Specifically, in 1957 “crypto-eugenics” was promoted within the Eugenics Society as a strategy for continuing to support eugenics subtly through partnerships with other organizations, particularly those working towards accessible birth control. In 1989 the group changed its name to the Galton Institute (http://www.galtoninstitute.org.uk/) and began focusing on funding birth control in poor countries. MacKenzie, Statistics in Britain, 45–46.[↑]

- Even though eugenics was losing popularity after World War II, academic programs and institutes devoted to eugenics continued to exist. Specifically, eugenics courses were taught in public land-grant universities until 1972. Leland L. Glenna, Margaret A. Gollnick, and Stephen S. Jones, “Eugenic Opportunity Structures: Teaching Genetic Engineering at US Land-Grant Universities Since 1911,” Social Studies of Science 37 (2007): 281–296.[↑]

- Condit, Meanings of the Gene, 63–96.[↑]

- Eli Clare, Exile and Pride: Disability, Queerness, and Liberation (Cambridge: South End Press, 1999).[↑]

- Condit, Meanings of the Gene, 63–96; Troy Duster, Backdoor to Eugenics (New York: Routledge, 2003), 3–20.[↑]

- See, for example, Mae M. Ngai, Impossible Subjects: Illegal Aliens and the Making of Modern America (Princeton: Princeton University Press, 2004); Smith, Conquest; Loretta Ross, “The Color of Choice: White Supremacy and Reproductive Justice,” in The Color of Violence: The INCITE! Anthology, ed. INCITE! Women of Color Against Violence (Massachusetts: South End Press, 2006), 54–65; Gabriel Chin, “Regulating Race: Asian Exclusion and the Administrative State,” Harvard Civil Rights–Civil Liberties Law Review 37.1 (2002).[↑]

- See, for example, Smith, Conquest; Kenneth J. Neubeck and Noel A. Cazenave, Welfare Racism: Playing the Race Card Against America’s Poor (New York: Routledge, 2001); Loretta Ross, “The Color of Choice”; Condit, Meanings of the Gene; Duster, Backdoor to Eugenics; Zuberi, Thicker than Blood.[↑]