February 13, 2014

University of Toronto

From the colloquia series “Feminist & Queer Approaches to Technoscience”

Shaka McGlotten: Why are Black people important? This is how comedian, geek, and author Baratunde Thurston began his 2009 SXSW slideshow, “How to Be Black (Online).” His playful intro, which I have appropriated in its entirety here, led into a more sophisticated, if also still comical, analysis of Black online life.

Thurston noted in particular the waning digital divide. Blacks, he pointed out, were online about as much as Whites, if you count both tethered and wireless access. And both groups tend to use the same sites, with a few exceptions. Thurston also noted the persistence of racism on the web, a point that’s also been underlined by numerous scholars of race and the internet. Thurston focused specifically on the Black use of Twitter, which he links to the call and response game of insults known as the “Dozens.” Of course, tweeting Blacks have caused consternation among some White Twitter users. One remarked, “Wow. Too many negroes in the trending topics for me. I may be done with this whole Twitter thing.”

Thurston’s work on race and technology provides a template for my talk today. Here I engage in a Black queer call and response with a few key areas in culture. I use an eclectic group of artifacts: Thurston’s slideshow, an interview with Barack Obama in the wake of the NSA surveillance scandal, the artwork of Zach Blas, and a music video about technology and gentrification. I do this to proffer the heuristic of Black data. This heuristic, I suggest, offers some initial political and analytical traction for Black queer studies to more fully engage with the theories, effects, and affects of network cultures. And although there are some significant bodies of literature in science and technology studies, as well as cultural and media studies, that grapple with issues of race, only a handful of works from Black queer studies itself really address sexuality and new media head on.

So here I use Black data to think through some of the historical and contemporary ways that Black queer people, like other people of African descent and people of color more broadly, are hailed by big data, through which techniques of race and racism reduce our lives to mere numbers. We appear as commodities, revenue streams, statistical deviations, or vectors of risk. Big data also refers to the efforts of states and corporations to capture, predict, and control political and consumer behavior. In my reading, then, Black data is also a response to big data’s call. And I’m going to offer some readings that outline possible political and affective vectors—some ways to refuse the call or perhaps even to hang up.

Black queer lives are often reduced to forms of accounting that are variously intended to elicit alarm or to direct highly circumscribed forms of care. Statistics are typically used to mobilize people, for example, in the fight against HIV/AIDS: statistics like the fact that Blacks account for 44 percent of new HIV infections in the United States. Statistics are also used to direct attention to the omnipresence of violence in Black life or to the specific forms of violence directed against Black LGBTQ people, as in the National Coalition of Anti-Violence Programs’ 2012 report, which describes how LGBTQ people of color were nearly twice as likely to experience physical violence as their white counterparts, and transgender people of color were two and a half times more likely to experience police violence than white cisgender survivors.

Assigning numerical or financial value to Black life, transforming experience into information or data, is nothing new. Rather, it is caught up with the history of enslavement and the racist regimes that sought to justify its barbarities. Between the sixteenth and eighteenth centuries more than twelve and a half million Africans were transported to the New World. Two million, and likely many more, died during the Middle Passage alone. A typical slave ship could carry more than 300 slaves, arranged like sardines, and the sick and the dead would be thrown overboard, their loss claimed for insurance money.

Other, more recent data circulates in the wake of the ongoing global recession, or of the protests against George Zimmerman’s acquittal in the killing of seventeen-year-old Trayvon Martin. Between 2007 and 2010 alone, Black families in the United States saw their wealth drop 31 percent. And in 2012, 136 unarmed Black men were killed by police and security guards.

It is tempting, of course, to ascribe these racialized accountings to the cruel systems of value that are established by capitalism, which seeks to encode, to quantify, and to order life and matter into categories of commodity, labor, exchange value, and profit. Indeed, race itself functions as such a commodity in the era of genomic testing. A simple oral swab test can help you to answer Thurston’s question “How Black are you?,” and you can watch others’ reactions on the popular television show Faces of America, a show that’s about genealogical testing and people trying to find their racial or ethnic roots. But as Lisa Nakamura, Peter Chow-White, and Wendy Chun observe, race is not merely an effect of capitalism’s objectifying systems. Rather, race is itself a co-constituting technology that made such forms of accounting possible in the first place.

Race as technology, Chun notes, helps us to understand how race functions as the “as,” how it facilitates comparisons between entities that are classed as similar/dissimilar. As Mel Chen put it in their recent book Animacies, race is an animate hierarchy in which the liveliness and value of some things—say, whiteness, or smart technology—are established via proximity to other things that are positioned lower or further away—like Blackness, or dumb matter. This poster’s tweet, “Wow. Too many negroes in the trending topics for me,” simply reiterates in the realm of microblogging hierarchical techniques of racism that see Black people as polluting, and therefore as distasteful or dangerous, or that would deny information and technology to the subjects of discrimination.

Of course, all of the statistics that I have just gone through are probably familiar, at least to some of us. And while useful, they tell only very partial stories, and they tend to reduce Black life to a mere effect of capitalism or to a kind of numerology of bare life. So in what follows, I’m going to sketch a few different trajectories for what I’m calling Black data. I’ll enact Black data as a kind of informatics of Black queer life, as reading and throwing shade to grapple with the NSA surveillance scandal, new biometric technologies, and the tech-fueled gentrification of San Francisco. These readings, which are perhaps obviously also actings out, also help to illustrate the ways that Black queer theories, practices, and lives might be made to matter in relation to some of the organizing tensions of contemporary network cultures: privacy, surveillance, capture, and exclusion.

Black queers help to frame what is at stake in these debates insofar as we quite literally embody struggles between surveillance and capture, between the seen and unseen, between the visible and invisible. Moreover, queers of color and people of color more broadly have developed what others have called rogue epistemologies, which often themselves rely on an array of technological media that might help to make us present or to disappear. In the readings that follow, I also gesture towards the virtual affinities that Black queer theoretical or political projects might share with cryptographic and anarchistic activism.

In a song made popular in the last year, Zebra Katz, a Black queer rapper, says “Ima read.” So I’m going to read Obama’s face. Obama was raised by white people, not drag queens, but he knows how to give good face. But in the moments before a recent interview with PBS’s Charlie Rose, Obama’s signature smile cracked, revealing instead an ugly mask. And this mask held a tense set of ironies. The United States’ first Black president defended the unprecedented expansion of the National Security Agency’s surveillance programs to include the collection of the metadata of millions of Americans’ and global citizens’ telephone and email correspondence. He accused Edward Snowden, a former NSA contractor turned whistleblower, of spying and called for his arrest, continuing a pattern of aggressively prosecuting those who leak evidence of governmental overreach.

The racial melodrama is striking. A Black man authorized the capture and arrest of a young White man, Snowden, who by revealing the spying program directly challenged the hegemony of US imperialism, a project that has historically been and is presently tied to the control, domination, torture, kidnapping, and murder of Brown and Black people around the world. In this instance, Obama’s grin is a failed mask, or it is the slippery gap that hosts the mask before its radiant, populist actualization.

So in the interview, Obama was at pains to tell Charlie Rose that in fact the NSA was not doing anything illegal, that the processes being undertaken were akin to just getting a warrant for a wiretap, when in fact none of this was true. He also made a point of saying that metadata, data about data, is not about personal content, not about the content of the material, which is also quite false.

How many of you have seen this kind of image before? It’s a program called “Immersion” that was developed by a professor at MIT. It’s just one example of a data visualization program. You can go online, find it, and use it yourself. It provides a visualization of the metadata in your Gmail correspondence, in this case mine. You don’t know very much about me, but these clusters and circles represent the people I’ve been in correspondence with. So just to take a best guess, which of these circles represents my intimate partner?

Audience member voice: [inaudible]

Shaka McGlotten: Yeah. Okay. Current husband. And what do you think about my ex? Who is my ex?

Audience member voice: The small one.

Audience member voice: No. The other blue one, right?

Shaka McGlotten: The other big blue one, yeah. That’s right. There’s mom, there’s colleagues. There’s a whole cluster of ones that are related to my work at my institution.

So you can see just from this very simple image that metadata can in fact reveal quite a lot about a person’s most intimate relationships. And ongoing revelations are showing that the uses to which this kind of metadata is put are actually diverse. One of the first articles that Glenn Greenwald and others published on the new site The Intercept pointed out that the NSA’s metadata collection techniques have been or are being used in the drone assassination programs across the Middle East.

In another era, and maybe still in this one, Obama’s grin might embody the racist fantasy that all Black people are animated by a desire to please or reassure White people. But here the mask is a more familiar code. It’s just a politician’s lie. Don’t worry. Everything’s fine. Carry on.

Although very brief, Obama’s expression arrested my attention, an attention that is shared with other queers and people of color, one that is always attuned, through calibrated and diffused looks, speculations, and modes of attention, to what Phillip Brian Harper has called “the evidence of felt intuition.” These are the subtle or not-so-subtle gestures that might indicate shared desire in a cruising space or the threat of violence in a public one. My cynical intuition, “Ima Read,” also collides with nostalgia for a scene of optimism. I cannot help but juxtapose Obama’s rictus grin with Shepard Fairey’s famous portrait of Obama gazing into the distance. To this juxtaposition we can add a meme that emerged in the wake of the NSA scandal.

For Giorgio Agamben and Emmanuel Levinas, faces condition our ethical encounters with one another. Agamben writes, “Only where I find a face do I encounter an exteriority and does an outside happen to me.” In the work of Deleuze and Guattari, however, the face is something more ambivalent. It is operationalized as a regulating function whose origins lie in racism. In their account, what they call “faciality” determines which faces can be recognized or tolerated. The Dozens and Black queer reading practices, in particular, are uncanny inversions of this concept. So rather than serving to hierarchically order bodies into viscous clumps, to dominate by comparison to a [inaudible] or a norm, reading Obama’s face through the Dozens might yield a comic finality. You’re so ugly even [inaudible] says good-bye. Or: your smile is such a lie not even your White mother would believe you. But Obama’s grimacing mask is not merely a sign to be decoded, a truth to be unveiled. A read is a punctum, but it is also always an invitation, a salvo, and a call and response.

Edward Snowden unmasked himself in part because he believed that by stepping out from the veil of anonymity, by revealing his identity, by giving face, he might effect some degree of control over the representation of his decision to confirm the unprecedented scale of the NSA’s programs and also encourage others to come forward. In addition, and unsurprisingly, he believed that his anonymity might in fact endanger him, making him vulnerable to the kidnapping, torture, and murder that he knew the US government was capable of. By coming forward or coming out, Snowden curiously mimicked some Black and queer practices, which can mix a performative hyper-visibility, an awareness of one’s difference and visibility, with invisibility or opacity and indifference, or even hostility, to the norm or to being read.

James Baldwin, riffing on Ralph Ellison, expressed it somewhat differently in a 1961 interview in which he linked Black visibility and invisibility to whiteness: “What white people see when they look at you is not visible. What they do see when they do look at you is what they have invested you with. What they have invested you with is all the agony, and pain, and the danger, and the passion, and the torment—you know, sin, death and hell—of which everyone in this country is terrified.”

Snowden had gone stealth for years, passing as a mild-mannered analyst, keeping his civil libertarian streak on the down-low. Then he appeared, carried it, and vanished. And as of this writing he is, of course, in Russia, where he’s been granted temporary asylum while the US continues to bully other nations into denying him egress. Yet Snowden’s face, like that of recently convicted army whistleblower Chelsea Manning, now appears on the placards of thousands of protestors around the world. Their faces have become both screens and masks. Their faces stand in for or project a generalizable face—my face, your face, all of our faces. And increasingly, as at a recent protest in Berlin, the faces of these figures are themselves worn as masks, barring access to an individual or specific face while calling into existence a shared or collective one. These faces or masks make dual demands: transparency from the government, opacity for the rest of us.

In her study of the transatlantic performances of Black women, historian and performance studies scholar Daphne Brooks uses the concept of “spectacular opacity.” She does this to retool colonial tropes of darkness which have, in her words, “historically been made to envelop bodies and geographical territories in the shadows of global and hegemonic domination.” Like some uses of masks, or among transgender folk going stealth, darkness and opacity have the ability to resist the violent will to know, the will to transparency. Martinican critic and poet Édouard Glissant says that a person has a right to be opaque. And this is a point that is echoed in different contexts by cypherpunks like Julian Assange and the activist group Anonymous. For Assange, Anonymous, and others, such as the Electronic Frontier Foundation, developing cryptographic literacies in this political moment is essential. This might involve using a browser like Tor, using a virtual private network, an array of browser add-ons, and secure file transfer services, among many other techniques.

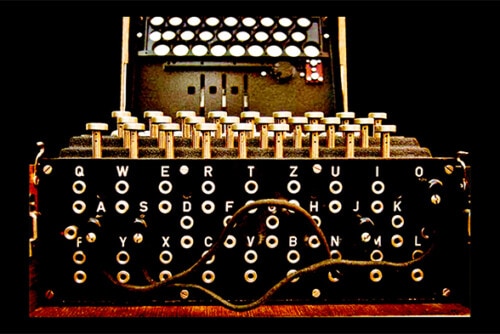

Encryption transforms information into codes that are unreadable by anyone without the proper key. And learning how to make oneself opaque is a practical necessity and a political tactic in this moment of big data’s ascendancy, in which clickstreams, radio frequency ID tags, the GPS capacities of our cellphones, closed-circuit television, and new biometric technologies are employed by states and corporations, sometimes together, to digitally log our movements and every technologically mediated interaction. Yet states and corporations have made encryption more difficult in recent years, sometimes describing cryptographic tools as weapons and encrypted communications as threats to national security.

So how can citizens challenge state and corporate power when those powers demand that we accede to total surveillance and at the same time criminalize dissent? How do we resist such demands, when journalists, whistleblowers, activists, and artists are increasingly labeled as traitors or terrorists?

Network theorist Alex Galloway provides some conceptual and political starting points in an essay he calls “Black Box, Black Bloc.” In the essay he considers the way the black bloc, an anarchist tactic of anonymity and massification, and the black box, a technological device for which only the inputs and outputs but not the contents are known, collide in the new millennium. The black box provides a model for the individual and collective black bloc to survive. Using an array of technological and political tools, we might turn to black boxing ourselves in order to make ourselves illegible to the surveillance state and to big data.

To resist the hegemony of the transparent, we (and the “we” here, I imagine, is loosely composed of people who might be opposed to the unholy marriage of militarism, corpocracy, manufactured consent, and neoliberal economic voodoo) will need to embrace techniques of becoming dark or opaque in order to better become present in the ways that we want, but without being seen or apprehended.

So we too can employ masks that lie, but unlike Obama’s mask, these masks might help to produce a sense of camaraderie. In the black bloc, or among the real-world protests organized by Anonymous and others, a mask makes people anonymous while also representing a shared collectivity. This is evidenced in the ways that the Guy Fawkes mask popularized by Anonymous is increasingly taken up in contexts around the world, as in the student protests of the last few years in Quebec or the ongoing protest movements in Europe, Brazil, and elsewhere. In these contexts, masks operate as part of a new politics of opacity, a form of Black data that helps to make identities or identifying information go dark or disappear, while at the same time hailing an incipient multitude. Such a multitude has also been reflected in mobilizations that occurred in the wake of George Zimmerman’s acquittal, in which protestors appeared in hoodies and declared, “I am Trayvon Martin.”

Reading Obama’s mask, or trying to decipher his real character or intentions, invites us to reflect on our own desires for transparency, for knowing or settling on the truth in an era in which transparency and the truth are always already staged by the NSA, Google, Apple, Microsoft, and Facebook, but also by our bosses, colleagues, students, parents, friends, and lovers. In this context, masks can offer a layer of protection rather than hide a real essence. A good mask, one resistant to efforts to decode it, might in fact provide us with a little bit more room to maneuver in our controlled society. Speaking in an overlapping context, the philosopher Jacques Derrida discussed the cryptography of some texts, describing their unyielding secrecy as an experience that does not make itself available to information, that resists information and knowledge and that immediately encrypts itself.

While the collection and interpretation of metadata, data about data, has become increasingly sophisticated, other racial and sexual profiling techniques remain crude. Currently, most of these techniques rely on the simple visual apprehension of another’s difference. One obvious example would be Stop and Frisk, the New York City police program that began in 2002 and that resulted in more than four million stops, nearly 90 percent of which involved Blacks and Latinos. The wanton murder of queers of color is of course another.

Recent work in biometrics and the study of movement foreshadows new technologies for capturing and recording the body. Recent scientific research in facial recognition and facial-cue recognition, for example, a great deal of which has been done here at the University of Toronto, provides a basis for a biometrics of queerness. On the surface, the research gives credence to the concept of “gaydar.” A series of studies by Nicholas Rule, a professor here at U of T, and others showed that people are able to make very fast, above-chance judgments about sexual orientation. How soon might these abilities be coded into facial recognition software? Just how difficult might passing become?

Christoph Bregler, a professor of computer science at NYU and director of its Movement Lab, is among the leaders of this new research. In an interview with NPR’s On the Media in the wake of the Boston bombing, he described some of these coming technologies. He began by noting his ability to identify other Germans while walking on the street in New York City. He said that he could hear a barely audible snippet of someone’s speech, or notice something about how they walked or moved their body, and that together these things contributed to nearly subliminal processes of recognition and identification. He used this personal example to describe problems with identifying the Boston bombing suspects. The surveillance camera footage taken during the bombing required law enforcement to spend many, many hundreds of hours combing through the material to look for the suspects. But Bregler believes that this work can, should, and will be totally automated. In the interview he claimed that his own research teams can already identify national identity with more than 80 percent accuracy.

So Bregler imagines a world in which these technologies are more widely available and automated, making the identification of criminals or terrorists, or just the rest of us, much easier for law enforcement. But there are also a number of critics of these new biometric technologies, and two of them are Shoshana Magnet and Simone Browne. They address some of the many problems with these approaches to biometrics, and they emphasize in particular the ways that these technologies tend to reproduce social stereotypes and inequalities. Magnet notes the ways that biometrics work differently for different groups. Many biometric technologies, for example, rely on false ideas about race, such as the association of particular facial features with racial groups. These technologies also tend to reproduce the marginalization of transgender people, who are in many cases unreadable by them. Simone Browne, meanwhile, links contemporary biometrics to histories of efforts to create identification documents that shape human mobility, security applications, and consumer transactions. And in a very important essay, she also links the history of ID documents to histories of racial surveillance technologies, such as slave passes and patrols, wanted posters, and brandings. So with biometric technologies, details about a person’s life, their experience, their embodiment, are coded as data that does not, strictly speaking, belong to them and that is put to use by states and corporations in ways over which they may have little control.

Magnet and Browne thereby underscore some of the ethical dilemmas related to social stratification and intellectual property in relation to biometric technologies. They and others also describe the ways these technologies represent a desire for unerring precision and control, a kind of technological fantasy that can never in fact be achieved. Yet this fantasy of control is, paradoxically, bound to the idea that new technologies will help us be free.

Importantly, the control that is represented, or the fantasy of control that is represented, by these automated processes is also not infallible. They regularly fail. Magnet’s work in particular underscores biometric failures, and she argues that biometrics do real damage to vulnerable people and groups, to the fabric of democracy, and to the possibility of a better understanding of the bodies and identities these technologies are supposedly intended to protect.

How many of you have registered your biometric data with Canada, so that when you travel in and out of the country you just go up to the little iris recognition scan? No one wants to claim it? I saw people coming in when I arrived yesterday, and the thing that I kept hearing in the background is that people moved up to the machine and they stood close to it and they moved away and they tried to make it work, but sorry, we—you know, “we”—at this time we cannot read your information. The machine was constantly failing.

In some recent and upcoming projects, the artist and theorist Zach Blas offers creative hacks that disrupt new biometric technologies of the face. His collaborative project Facial Weaponization Suite, which is also sometimes known as Fag Face Mask, contests the ideological and technical underpinnings of face-based surveillance. In this community-based project, masks are collectively produced from the aggregated facial data of participants. Fag Face Mask responds directly to the studies about facial-cue recognition that I just mentioned, and the project offers ways to induce failures in these technologies. In the project he uses facial data from queer men (this includes gay men, but also transgender men) to create a composite that is then rendered by a 3D printer. The resulting mask is a kind of blob, which you can sort of see at the bottom left here, an essentially unreadable topography of the face. Thus far he’s printed two of these masks, one pink and the other black. I think he’s working on more, and also on these face cages.

In the video documentation of the work, which self-consciously echoes the aesthetics of the videos released by Anonymous, a figure wearing the pink mask describes the ways biometric technologies seek to read identity from the body, reproducing in the process the notion that one could have a stable identity at all. And a few moments into the video, the mask itself, now a pulsing animation, recounts the failures of biometrics.

Female voice: Biometric technologies rely heavily on stable and normative conceptions of identity, and thus structural failures are encoded in biometrics that discriminate against race, class, gender, sex, and disability. For example, fingerprint devices often fail to scan the hands of Asian women and iris scans work poorly if an eye has cataracts. Biometric failure exposes the inequalities that emerge when normative categories are forced upon populations.

Facial-recognition technology has become a pervasive, popular device for biometric surveillance, a thriving, rapidly developing part of our new surveillance culture. Facial-recognition techniques now range from algorithms that extract landmarks on faces, such as cheekbones, noses, eyes, and jaws, to 3D programs that map the shape of a face, to various forms of skin-texture analysis. Typically, faces are collected in databases to compare and search against for a variety of possible criminal activities. For example, in its 2000 presidential election, the Mexican government used facial recognition to prevent voter fraud. In 2001, Tampa Bay police used facial-recognition software to search for criminals and terrorists during the Super Bowl, finding nineteen people with pending arrest warrants. Within the last year, Occupy activists and Afghan civilians have been the target of massive biometric data-gathering sweeps by US police and military forces. The ubiquity of facial recognition now spans from London’s massive CCTV network to the German federal criminal police office, Facebook’s facial-recognition auto photo tagging, Apple’s iPhoto and iPhone, Google’s Picasa, US Homeland Security, and the US Department of State, which has the largest facial-recognition system in the world: over 75 million photographs for visa processing. We also commonly experience facial recognition and detection now with our digital cameras that locate faces and even smiles.

Later on in the video, Blas goes on to ask, what are the tactics and techniques for making our faces nonexistent? How do we flee this visibility into the fog of a queerness that refuses to be recognized? Fag Face Mask uses masks and the aggregated faces of fags as weapons in order to evade or escape capture. Explicitly linked to the masked communal figures of the black bloc, the Zapatistas, and Anonymous, Blas’s project invites us to share in what he calls an era of deliberate mystery, an opaque queer fog.

Resisting biometric technologies of the face, or introducing disruptions into this field of surveillance, might also result in yet more extreme approaches among law enforcement. As Blas observes in this video, Occupy activists and Afghan civilians alike became the object of biometric data collection unwillingly, and the NYPD has criminalized the wearing of masks in public and at public protests, something that I think has also happened here in Canada in the wake of the protests in Quebec. Recently, the Washington Post also revealed that in the United States more than 120 million people had been unwittingly added to facial-recognition databases when they went to obtain their driver’s licenses.

Techniques of refusal such as anonymous massification via masks are unevenly available. There are some for whom flight from these technologies may simply not be possible, or for whom it may be forced. For example, becoming clandestine or deserting these surveillance regimes are not really options for populations that are already subject to highly spatialized forms of surveillance and control. The Stop and Frisk program, for example, takes place almost entirely in Black and Brown neighborhoods, a context in which people yearn to escape police violence and harassment, but where efforts to evade surveillance or to contest it only result in heightened forms of scrutiny. Hoodies and baggy pants or mascara and glitter are already sufficient to attract many violent forms of attention. In this context, young Black and Brown men, as well as queers, might be better served by technologies that might help them to pass, that might make them white. It is a dark wish, one encoded in the song that I turn to next.

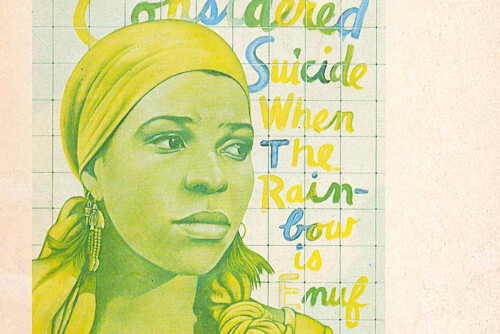

The Black Glitter Collective’s 2013 music video “Google Google Apps Apps” is an angry lament for the death of queer San Francisco. Latina drag queen Persia, together with collective members and Daddy$ Pla$tik, work it to an up-tempo beat, but the song itself is pretty depressing.

If the first two sections of this talk have been concerned with the relationship between masks and the political possibilities represented by a kind of darkness, darkness as opacity, a darkness that I’m calling Black data, this last section of the talk will employ this concept of Black data in a different way. Here I’ll focus on the material effects that those companies whose business is data have had on the spatialization of dark or unseen people. And I’ll focus in particular on the ways that queers and people of color have been forced to flee the gentrifying processes that have occurred in the wake of San Francisco’s most recent tech boom. So here, Black data takes the form of what I’m calling Black Ops—an angry, ambivalent, even masochistic, queer of color response to the entwined logics of white supremacy and the so-called values of the high-tech industry.

The Black Glitter Collective, “Google Google Apps Apps”: https://www.youtube.com/watch?v=5xyqbc7SQ4w

Persia wrote this song in response to the stress of her imminent unemployment. She was about to lose her job at SFMOMA, and Esta Noche, the gay Latino bar where she performed in San Francisco, was having trouble paying its bills. In the song she responds to the most recent wave of tech-fueled gentrification in San Francisco, which has resulted in astronomical increases in rent. The median rent for a one-bedroom apartment in the city is now nearly $3,000 a month. Unsurprisingly, these increases have disproportionately impacted already vulnerable populations: queers, people of color, and grassroots activist nonprofits.

New technologies seek to transmute the base matter of bodies into code, into digital forms of information that are intended to enhance communication and sociability, as well as, to borrow a category from Apple’s apps, our productivity. But they thereby also aid in the biopolitical management of populations, while at the same time profiting corporations like Google, Apple, Facebook, and Twitter, giants in a region that is also saturated with smaller and mid-sized businesses, as well as the venture capitalists who support them. And in the song we can see some of the results of their success without needing any complicated decryption algorithms. This is just addition, and even we humanists can do it. These corporations reap record profits; they work with city governments to bring jobs to the region; they develop needy city districts; then rents go up. “SF, keep your money, fuck your money,” shouts Persia. The song underscores the material effects that the growth of digital technologies and economies has had on real-world spaces: in this case, the forced exodus of the peoples and cultures that helped to make San Francisco a political and creative laboratory, that made it home to so many freaks, artists, and sexual adventurers.

In his now famous account, Richard Florida noted the appeal of quirky, diverse cities to high-tech companies, to forward-looking entrepreneurs, and to what he called the creative class. He did not, however, account for the ways that the influx of white-collar creatives and geeks tended to fundamentally alter the very things that made those destinations so appealing in the first place. So Persia’s resentment, like that of the working class more broadly, does not figure into any of his analyses. In a recent discussion of Silicon Valley’s awkward, and usually quite selfish, forays into politics, the New Yorker’s Jeffrey Toobin observed that the technology industry has transformed the Bay Area without being changed by it: in a sense, without getting its hands dirty.

Importantly, the song’s refrain links these processes of gentrification and displacement, as well as the underlying ideologies and practices of neoliberal capitalism, to whiteness. Persia and her crew sing, “Google, Google, apps, apps, gringa, gringa, apps, apps, I just wanna-wanna be white.” The technological giants that aim to connect people everywhere are intimately tied to new surveillance regimes, something that the song acknowledges early on when Persia tells her audience “Twitter. Twitter me. Facebook. Facebook me.” But they are also linked to white privilege and to class domination. The new arrivals to San Francisco, these gringos and gringas, terms that in this context refer to English-speaking non-natives, reproduce the violent fantasies of white manifest destiny. In this white manifest destiny, they imagine themselves to be bringing civilization in the form of design-savvy gadgets, tweets, instant picture sharing, cat videos, augmented reality, biometric tagging, commercial data-mining, and apps for everything. They are bringing these things to the unwashed hippies and queers of San Francisco, as well as to the billions of needy people around the world. Although they might sometimes come across as insensitive, if comments like “adapt or move to Oakland” are any indication, they don’t have it out for anyone in particular. They’re rational, self-evident social actors. Self-evident because their motives are pure and transparent: technological mastery, professional achievement, economic success, white-collar comforts like living in the Bay.

Their privilege is evident, as well, in the alarm and discomfort that they feel in the wake of recent protests against their presence in the Bay Area. In a much-discussed Twitter image, protestors at an anti-gentrification event smashed a piñata of a Google bus, a papier-mâché avatar of the cushy luxury shuttle service that Google provides to its San Francisco employees. The buses, which are also used by other companies, transport workers to the Google offices and are equipped with high-speed Wi-Fi so they can stay connected 24/7. So they can optimize their productivity, their workflows. So they can gossip or just sleep en route to work without ever having to mix it up with the masses.

In her book The Shock Doctrine, Naomi Klein talks about the growth of red and green zones in cities around the world. It is sort of like Iraq: you had a Green Zone, where for the Americans everything was fine, and everywhere else in Iraq nothing was fine. And this is actually happening in cities all over, in what are essentially forms of class-based apartheid that separate the haves from the have-nots.

In the video, Daddy$ Pla$tik members Tyler Holmes and Vain Hein reflect on their own chances of becoming white. While linking bleached assholes to whiteness is a comical jab at the occasional craziness of white culture and its sexual hang-ups, the wish that underlies the chorus “I just wanna-wanna be white” is nonetheless very powerful and real. Whatever their political orientations, few people of color and few queers of color can escape the lure of whiteness. Who doesn’t want to be beautiful, rich, and white? Who doesn’t want to possess technological, financial, and social power? Who doesn’t want to control space? Who doesn’t want to escape the darkness of poverty, violence, and exclusion? Why wouldn’t one opt instead for translucency, transparency, and technological control and mastery? As Venus Xtravaganza put it in Paris Is Burning, “I would like to be a spoiled, rich, white girl.” The song ambivalently expresses the same wish. First, it articulates an erotics of gentrification, one that mixes sexual desire with domination through ironic lyrics that link BDSM play to gentrification by way of an ode to Madonna’s famous song “Justify My Love,” with “justify” changed to “gentrify”: “Techies take the Mission. Techies gentrify me, gentrify me, gentrify my love.” Second, however, it underscores the kind of grotesquery that results when the outcasts, the freaks, and the queers of color do try to become white. So near the end of the video, Persia and her crew smear a white paint stick over their faces and don blonde wigs, while Vain Hein adds a Leigh Bowery-style doll mask to his already clownish white face. The chorus now takes on another meaning, one that makes the violence of the wish and the impossibility of its realization more palpable.
“I wanna-wanna be white,” but obviously I never will be, not if that means having money or the ability to influence the shape of particular technologies or urban spaces, rather than being the target consumers of high-tech firms or the chaff that cities are trying to cull in their obsequious efforts to please high-tech companies.

Of course, this critique of the marriage of high tech, gentrification, and whiteness is shot through with many ironies. Persia wrote the lyrics to the song on her phone. I wrote this paper on an eight-year-old MacBook Pro, in case you were wondering. The video obviously required considerable manipulation and technical skill to produce. It appeared on YouTube, a Google property. And it’s been widely circulated on Facebook and Twitter, where I found it. All of this is an effort, I think, to gain some traction in network culture, in which traction equals attention equals hits equals, Persia hopes, some form of financial remuneration, maybe just enough to help her put down a deposit on her new East Bay digs. In the comment section of the video, an inevitable troll remarked on some of these ironies: “Interesting that the lyrics of the song that’s so hostile to the tech industry were written on a phone.” To which another poster sarcastically replied: “It’s interesting how all those blacks wanted out of slavery even though they got free food and homes.”

The desire to become white is a shadow that haunts the lives of queers of color, and perhaps the most common reaction to such a wish would be to deny it or to try to deconstruct it. But following queer theorist Jack Halberstam’s recent work on what he calls shadow feminisms, I wonder if the urge to challenge the logic of whiteness in ourselves, the internalized and not-so-internalized violences that are engendered in all of us by white settler colonialisms, does not itself reproduce another set of violences, in the name of racial authenticity or purity, for example. Instead, what might happen if we embrace those forms of darkness in which identity is obscured or rendered opaque? There would be no coherent, rational, or self-knowing subjects here, just furious refusals. These refusals are what I mean when I talk about Black Ops, a form of Black data that encrypts without hope of a coherent or positive or affirmative output. Queers and people of color might tactically redeploy Black Ops as techniques of masking, secrecy, or evasion.

So rather than follow the logic of the Black Hole (the name of a wireless-traffic intercept tool from the government contractor Electronic Warfare Associates, one of the many kinds of crazy technologies that are being developed to intercept and take our information), the Black Ops that I’m describing would imagine a world in which our identities and movements could become our own, opaque to the securitized gazes of states and corporations. New Black Ops technologies might help us all play out our masochistic fantasies of becoming white, becoming animal, becoming other, or just pure, private becoming.

In the song, Persia embraces a darkness that responds to the antisociality that is engendered by the tech giants with an ambivalent queer antisociality in turn. Her face morphs and masks. She affirms that yes, I want to be white like you. Twitter me. Facebook me. Gentrify my love. I’ll become white, jerky, stumbling, angry, cruel. Her crew’s transformation parodies the awkwardness and un-sublimated violence of white supremacy and at least some white people some of the time. Their Black Ops is both a refusal and a rearticulation of the stuck frustrations that activists, queers of color, and others feel in the wake of gentrification and neoliberal economic policies more generally. This ambivalence is even present in their name, the Black Glitter Collective. Black is for mourning or encrypting, and glitter is for queer fun, but it gets everywhere.

In this talk, I’ve tried to enact a form of Black data that differs from discussions of Black life that would reduce it to lists of bare accountings, accountings that are incomplete and that misleadingly suggest Black life must always and only be subordinated to historical and contemporary traumas or victimization. Instead, I’ve tried to cultivate a notion of Black data tied to defacement, opacity, and encryption. Black queer reads can shame a face, in this case Obama’s, and they can also articulate an opaque, encrypted point of view, one that resists being fully apprehended or made transparent. Throwing shade, in the context of drag and Kiki Balls, for instance, does not require any specific enunciation to deliver an insult. Rather, it uses looks, bodily gestures, and tones to deliver a message that might be at once very clear (like, “that is so ratchet”) but also open-ended and sneaky. I didn’t say anything because I didn’t have to.

So in this talk, I’ve suggested that such Black queer practices, which I have figured here as Black data or as Black Ops, might be usefully brought to bear on our discussions about network culture, especially those concerned with surveillance and with the impact of communication technologies on spaces and mobilities. I have, moreover, sought to ally the political possibilities of a Black queer conception of Black data with some of the orientations of anarchists and cryptographers. I hope that these perhaps unlikely alliances might yield new, creative, and viable forms of Black Ops, encrypted forms of reading and refusal that are imbued with a dark optimism toward the present, in which political and corporate interests collude to produce an ever-expanding web of ruin, a vast system of surveillance and capture that seeks to transform all of us into code.

Thank you.